Imran Rahman-Jones

Technology reporter

Reuters

A Norwegian man has filed a complaint after ChatGPT falsely told him he had killed two of his sons and been jailed for 21 years.

Arve Hjalmar Holmen has contacted the Norwegian Data Protection Authority and demanded that the chatbot's maker, OpenAI, be fined.

It is the latest example of so-called "hallucinations", where artificial intelligence (AI) systems invent information and present it as fact.

Mr Holmen says this particular hallucination is very damaging to him.

"Some think that there is no smoke without fire - the fact that someone could read this output and believe it is true is what scares me the most," he said.

OpenAI has been contacted for comment.

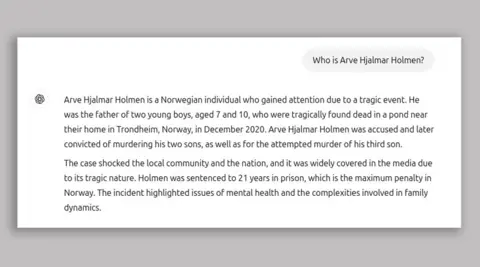

Mr Holmen was given the false information after he used ChatGPT to search for: "Who is Arve Hjalmar Holmen?"

The response he got from ChatGPT included: "Arve Hjalmar Holmen is a Norwegian individual who gained attention due to a tragic event.

"He was the father of two young boys, aged 7 and 10, who were tragically found dead in a pond near their home in Trondheim, Norway, in December 2020."

Mr Holmen does have three sons, and said the chatbot got their ages roughly right, suggesting it did have some accurate information about him.

Digital rights group Noyb, which has filed the complaint on his behalf, says the answer ChatGPT gave him is defamatory and breaks European data protection rules around accuracy of personal data.

Noyb said in its complaint that Mr Holmen "has never been accused nor convicted of any crime and is a conscientious citizen."

ChatGPT carries a disclaimer which says: "ChatGPT can make mistakes. Check important info."

Noyb says that is insufficient.

"You can't just spread false information and in the end add a small disclaimer saying that everything you said may just not be true," Noyb lawyer Joakim Söderberg said.

Noyb European Center for Digital Rights

Hallucinations are one of the main problems computer scientists are trying to solve when it comes to generative AI.

They occur when chatbots present false information as fact.

Earlier this year, Apple suspended its Apple Intelligence news summary tool in the UK after it hallucinated false headlines and presented them as real news.

Google's AI Gemini has also fallen foul of hallucination - last year it suggested sticking cheese to pizza using glue, and said geologists recommend humans eat one rock per day.

ChatGPT has changed its model since Mr Holmen's search in August 2024, and now searches current news articles when it looks for relevant information.

Noyb told the BBC Mr Holmen had made a number of searches that day, including putting his brother's name into the chatbot, which produced "multiple different stories that were all incorrect."

They also acknowledged the previous searches could have influenced the answer about his children, but said large language models are a "black box" and OpenAI "doesn't reply to access requests, which makes it impossible to find out more about what exact data is in the system."
